Essential Practices for Generative AI Security and Beyond

Speaker

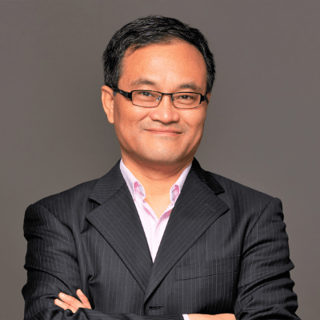

Ken Huang is a prolific author and renowned expert in AI and Web3, with numerous published books spanning AI and Web3 business and technical guides and cutting-edge research. As Co-Chair of the AI Safety Working Groups at the Cloud Security Alliance and Co-Chair of the AI STR Working Group at the World Digital Technology Academy under the UN Framework, he is at the forefront of shaping AI governance and security standards.

Huang also serves as CEO and Chief AI Officer (CAIO) of DistributedApps.ai, which specializes in generative AI training and consulting. His expertise is further showcased in his role as a core contributor to OWASP's Top 10 Risks for LLM Applications and his active involvement in the NIST Generative AI Public Working Group.

Key Publications:

- "Beyond AI: ChatGPT, Web3, and the Business Landscape of Tomorrow" (Chief Editor) - Strategic insights on AI and Web3's business impact

- "Generative AI Security: Theories and Practices" (Springer) - A comprehensive guide on securing generative AI systems

- "Practical Guide for AI Engineers" (Volumes 1 and 2) - Essential resources for AI practitioners

- "AI Ascension: A Story of Fiction and Reality" - Exploring AI's impact through narrative

- "Web3: Blockchain, the New Economy, and the Self-Sovereign Internet" (Upcoming, Cambridge University Press) - Examining the convergence of AI, blockchain, IoT, and emerging technologies

Huang led the development of the "Generative AI Application Security Testing and Validation Standard" for World Digital Technology Academy.

His co-authored work "Blockchain and Web3: Building the Cryptocurrency, Privacy, and Security Foundations of the Metaverse" has been recognized as a must-read by TechTarget in both 2023 and 2024.

A globally sought-after speaker, Ken has presented at prestigious events including Davos WEF, ACM, IEEE, CSA AI Summit, Depository Trust & Clearing Corporation, and World Bank conferences.

SUMMARY

Welcome to "MLSecOps Connect: Ask the Experts," an educational live stream series from the MLSecOps Community where attendees have the opportunity to hear their own questions answered by a variety of insightful guest speakers.

This is a recording of the session we held on September 11, 2024 with Ken Huang, CISSP.

During this session, Ken answered questions from the MLSecOps Community related to cybersecurity best practices, including questions about themes from the book, "Generative AI Security: Theories and Practices (Future of Business and Finance)."

Explore with us:

- How will the security landscape change as LLMs (large language models) are paired with RAG (retrieval-augmented generation)?

- In LLM security, a great deal of attention goes to the surrounding systems, but often little to the security of the model itself - how does Ken see this?

- How would Ken approach designing a roadmap for larger companies that already have systems in the AI, ML, and RPA (robotic process automation) environment but have not yet dealt with security?

- What are the options for creating a hybrid machine learning pipeline in a multi-cloud environment such as Google Cloud Platform (GCP), Oracle, on-prem, and Snowflake?

- Where does Ken see AISec responsibility ending up in a company's cybersecurity organization, considering that it creates multiple data trust boundaries, spans multiple AI tech stacks, and carries other data risks, which seems "wider/bigger" than AppSec?

- Which ISO standards for GenAI security are most relevant?

- How should we ensure that open source datasets are safe? What tools can be used to scan open source datasets?

- What are the new emerging trends in exploiting machine learning models?

- Dual use: what are some generative AI tools for cybersecurity?

- What are the key security considerations for data protection during fine-tuning LLMs and during run-time (inference)?

- Defining CVEs for generative AI (what's the equivalent)?

- Thoughts on adoption of LLM gateways or LLM firewalls.

- What are the areas where generative AI security solutions are needed? What are the biggest challenges in addressing these threats? To what extent is the problem due to governance, internal risk management, and technical responses, respectively?

- Aside from AI security - what is Ken currently working on the most?

Session references & resources (including timestamps of where each is mentioned in the video):

(7:50) "Mitigating Security Risks in Retrieval Augmented Generation (RAG) LLM Applications" - Cloud Security Alliance blog piece by Ken Huang.

(10:09) NVIDIA Confidential Computing with Trusted Execution Environment GPUs

(11:27) Books: "Practical Guide for AI Engineers" Volume 1 and Volume 2 by Ken Huang

(13:06) Book: "The Handbook for Chief AI Officers: Leading the AI Revolution in Business" co-authored by Ken Huang.

(20:04) StackGen

(25:10) Cloud Security Alliance AI Organizational Responsibilities Working Group: Defining industry roles for AI security teams, adapting to evolving challenges, and staying ahead of AI.

(26:24) OWASP Top 10 for Large Language Model Applications

(26:43) World Digital Technology Academy: "WDTA is an international NGO established in Geneva, under the guidance of the United Nations framework."

(26:55) Cloud Security Alliance AI Safety Initiative

(35:05) Dropzone AI: Pretrained AI SOC Analyst autonomous agent

(41:46) Book: "Beyond AI: ChatGPT, Web3, and the Business Landscape of Tomorrow" (Future of Business and Finance) co-authored by Ken Huang

(42:37) EU AI Act - MLSecOps Podcast episode "Exploring Generative AI Risk Assessment and Regulatory Compliance" with David Rosenthal, discussing (among many other things) the categories of risk outlined in the Act.

(44:13) EC-Council Course (6 hours): "Generative AI for Cybersecurity"

(48:38) Regarding the question about Gen AI CVEs: the CVE® Program mission is to "identify, define, and catalog publicly disclosed cybersecurity vulnerabilities."